I have been working on using edge computing to identify “intent”. I think most folks would agree that collecting data from video, audio, and biometric streams has value. What I have found is that most of that data is reviewed after the event has happened. Read about almost any crime and you will see “law enforcement is reviewing the data”. By the time the data is reviewed, the damage is done, and often it is fatal.

I needed a platform. A platform on which I could process multiple video, audio, and biometric streams.

Started with an old friend, the Raspberry Pi.

Not bad. Good, but only a single stream for video, audio, and biometrics.

Tried the NVIDIA Jetson TX2.

Better. More channels, but not fast enough.

Tried the NVIDIA Jetson Xavier.

Best. A dozen channels without maxing it out, and 32 TOPS (tera-operations per second).

The next step is to integrate the NVIDIA platform with Azure IoT. Here is a short video from Microsoft.

At first blush, it seems pretty straightforward.

This solution works when a second of delay here or there is acceptable.

When loss of life or bodily injury is a possible outcome, 100 or 200 milliseconds can be the difference between going home and becoming a statistic that law enforcement reviews days later.

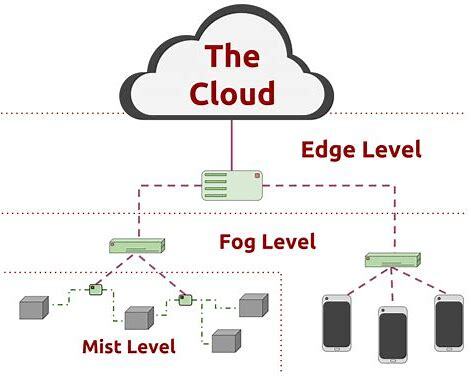

Enter the Fog. First there is the Cloud, dense with resources and services. Then there is the Fog, less dense with resources and services. And then there is the Mist: the collectors and actuators.
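To make the three tiers concrete, here is a minimal Python sketch of workload placement. It assumes a simple rule introduced only for illustration: run the job on the most resource-rich tier whose network round trip plus processing time still fits the latency budget. The `Tier` class, the `place` function, and every latency figure below are hypothetical placeholders, not measurements.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float  # hypothetical network round trip from the sensor to this tier
    resource_rank: int    # higher means more compute and services are available


# Placeholder figures for illustration only; measure your own deployment.
TIERS = [
    Tier("Mist", round_trip_ms=1.0, resource_rank=0),
    Tier("Fog", round_trip_ms=20.0, resource_rank=1),
    Tier("Cloud", round_trip_ms=250.0, resource_rank=2),
]


def place(processing_ms: dict[str, float], budget_ms: float) -> Tier | None:
    """Return the most resource-rich tier whose round trip plus processing fits the budget."""
    candidates = [
        t for t in TIERS
        if t.round_trip_ms + processing_ms[t.name] <= budget_ms
    ]
    if not candidates:
        return None  # no tier can answer in time
    return max(candidates, key=lambda t: t.resource_rank)
```

With hypothetical processing times of `{"Mist": 150.0, "Fog": 60.0, "Cloud": 30.0}`, a 200 ms budget lands on the Fog, because the Cloud's round trip alone exceeds it. A relaxed 1,000 ms budget can afford the Cloud.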

One way to think about the Fog as an architecture is to think about Hadoop.

“Apache Hadoop (/həˈduːp/) is a collection of open-source software utilities that facilitates using a network of many computers to solve problems involving massive amounts of data and computation. It provides a software framework for distributed storage and processing of big data using the MapReduce programming model. Hadoop was originally designed for computer clusters built from commodity hardware, which is still the common use.[3] It has since also found use on clusters of higher-end hardware.[4][5] All the modules in Hadoop are designed with a fundamental assumption that hardware failures are common occurrences and should be automatically handled by the framework.[6]

The core of Apache Hadoop consists of a storage part, known as Hadoop Distributed File System (HDFS), and a processing part which is a MapReduce programming model. Hadoop splits files into large blocks and distributes them across nodes in a cluster. It then transfers packaged code into nodes to process the data in parallel. This approach takes advantage of data locality,[7] where nodes manipulate the data they have access to. This allows the dataset to be processed faster and more efficiently than it would be in a more conventional supercomputer architecture that relies on a parallel file system where computation and data are distributed via high-speed networking.[8][9]”

The key term is “data locality”. With Hadoop, compute resources coexist with the data. Rather than collecting data from a remote location, the code is sent to where the data already lives. The goal is an answer in the shortest amount of time. The Fog follows the same idea as Hadoop: process data close to where it is generated to get answers quicker.
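Data locality can be illustrated with a toy, in-process simulation of the MapReduce pattern described in the quote. The map step runs against the blocks each node already holds, and only the small per-node results are moved and combined. This is a sketch for intuition, not Hadoop's API. `NODE_BLOCKS`, `map_on_node`, and `run_job` are hypothetical names, and a real cluster would run each map step on a separate machine.

```python
from collections import Counter
from functools import reduce

# Hypothetical cluster: each node already stores some blocks of a file locally.
NODE_BLOCKS = {
    "node-a": ["threat detected", "no threat"],
    "node-b": ["no threat", "no threat"],
    "node-c": ["threat detected"],
}


def map_on_node(blocks):
    """Runs where the blocks live: count words in this node's local blocks only."""
    counts = Counter()
    for block in blocks:
        counts.update(block.split())
    return counts


def run_job(node_blocks):
    # "Ship code to the data": the map function is applied per node, and only
    # the small per-node Counter results travel back to be combined (reduce).
    partials = [map_on_node(blocks) for blocks in node_blocks.values()]
    return reduce(lambda a, b: a + b, partials, Counter())
```

Here the raw blocks never leave their nodes; only three small word counts cross the (simulated) network, which is the same trade the Fog makes with sensor streams.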

“In 2011, the need to extend cloud computing with fog computing emerged, in order to cope with huge number of IoT devices and big data volumes for real-time low-latency applications.[5] Fog computing, also called edge computing, is intended for distributed computing where numerous “peripheral” devices connect to a cloud. The word “fog” refers to its cloud-like properties, but closer to the “ground”, i.e. IoT devices.[6] Many of these devices will generate voluminous raw data (e.g., from sensors), and rather than forward all this data to cloud-based servers to be processed, the idea behind fog computing is to do as much processing as possible using computing units co-located with the data-generating devices, so that processed rather than raw data is forwarded, and bandwidth requirements are reduced. An additional benefit is that the processed data is most likely to be needed by the same devices that generated the data, so that by processing locally rather than remotely, the latency between input and response is minimized. This idea is not entirely new: in non-cloud-computing scenarios, special-purpose hardware (e.g., signal-processing chips performing Fast Fourier Transforms) has long been used to reduce latency and reduce the burden on a CPU.

Fog networking consists of a control plane and a data plane. For example, on the data plane, fog computing enables computing services to reside at the edge of the network as opposed to servers in a data-center. Compared to cloud computing, fog computing emphasizes proximity to end-users and client objectives (e.g. operational costs, security policies,[7] resource exploitation), dense geographical distribution and context-awareness (for what concerns computational and IoT resources), latency reduction and backbone bandwidth savings to achieve better quality of service (QoS)[8] and edge analytics/stream mining, resulting in superior user-experience[9] and redundancy in case of failure while it is also able to be used in Assisted Living scenarios.[10][11][12][13][14][15]

Fog networking supports the Internet of Things (IoT) concept, in which most of the devices used by humans on a daily basis will be connected to each other. Examples include phones, wearable health monitoring devices, connected vehicle and augmented reality using devices such as the Google Glass.[16][17][18][19][20] IoT devices are often resource-constrained and have limited computational abilities to perform cryptography computations. A fog node can provide security for IoT devices by performing these cryptographic computations instead.[21]…”
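The last point, a fog node doing cryptographic work for devices too constrained to do it themselves, can be sketched with Python's standard `hmac` module. This assumes a hypothetical setup where sensors reach the fog node over a trusted local link and the fog node attaches an HMAC-SHA256 tag before forwarding readings upstream. `FOG_KEY`, `sign_on_behalf`, and `verify` are illustrative names. HMAC provides integrity and authenticity only; confidentiality would additionally require encryption, for example TLS on the uplink.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret provisioned to the fog node and the cloud,
# never to the constrained sensors themselves.
FOG_KEY = b"replace-with-a-provisioned-secret"


def sign_on_behalf(device_id: str, reading: dict, key: bytes = FOG_KEY) -> dict:
    """Fog node wraps a device reading with an HMAC-SHA256 tag."""
    payload = json.dumps({"device": device_id, "reading": reading}, sort_keys=True)
    tag = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify(message: dict, key: bytes = FOG_KEY) -> bool:
    """Cloud side: recompute the tag and compare in constant time."""
    expected = hmac.new(
        key, message["payload"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    return hmac.compare_digest(expected, message["tag"])
```

The sensor only has to deliver its reading to the fog node; the hashing happens on hardware that can afford it.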

In my solution, where seconds count, NVIDIA Xavier platforms at the edge run models that detect threats using both video and audio. Most of the time, threats do not come walking into a store or knock on your front door. When a threat is detected, I forward the frames to a server in the Fog for a deeper analysis to minimize false positives. I don’t want to flag someone holding a credit card as having a gun. If the server in the Fog agrees that the frames contain a valid threat, an alarm is sent to the location of the sensors. Two to three seconds from detection to alarm is acceptable, and far faster and more consistent than a human monitoring the sensors.
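The two-stage flow above, a fast edge model followed by a second opinion in the Fog, can be sketched as a small cascade. This is a minimal illustration, not the actual deployment code: `edge_detect`, `fog_verify`, and `send_alarm` are hypothetical callables standing in for the Xavier model, the Fog server's deeper analysis (in practice a network call), and the alarm at the sensor location. The 3-second budget mirrors the two-to-three-second target in the text; the 0.5 threshold is an arbitrary placeholder.

```python
import time
from typing import Callable, Sequence

Frames = Sequence[bytes]


def cascade(
    frames: Frames,
    edge_detect: Callable[[Frames], float],  # hypothetical fast model at the edge
    fog_verify: Callable[[Frames], bool],    # hypothetical heavier model in the Fog
    send_alarm: Callable[[], None],          # hypothetical actuator at the sensor site
    edge_threshold: float = 0.5,
    budget_s: float = 3.0,
) -> str:
    start = time.monotonic()
    score = edge_detect(frames)
    if score < edge_threshold:
        return "clear"            # the common case: nothing leaves the edge
    if not fog_verify(frames):    # second opinion filters false positives
        return "rejected"
    send_alarm()
    elapsed = time.monotonic() - start
    # The alarm is sent either way; the label records whether the budget held.
    return "alarm" if elapsed <= budget_s else "alarm-late"
```

A low edge threshold trades more Fog traffic for fewer missed threats, which is acceptable here because the Fog stage is what filters out the credit cards.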

Discover more from Threat Detection
