An introduction to Kafka: after a decade, a trusted friend. This post is both a very high-level introduction to Kafka and an introduction to how OverVotch leverages Kafka to provide a highly scalable, high-performance, redundant, and secure platform. While all of these attributes are available in cloud environments, Kafka combined with edge devices provides near-real-time performance at a very low cost, something not possible on any cloud platform.

For those wanting a reference that explains Kafka clearly and includes code examples on how to get the best from it, I recommend “Kafka: The Definitive Guide”, available on Amazon.

For those wanting the 30-second overview:

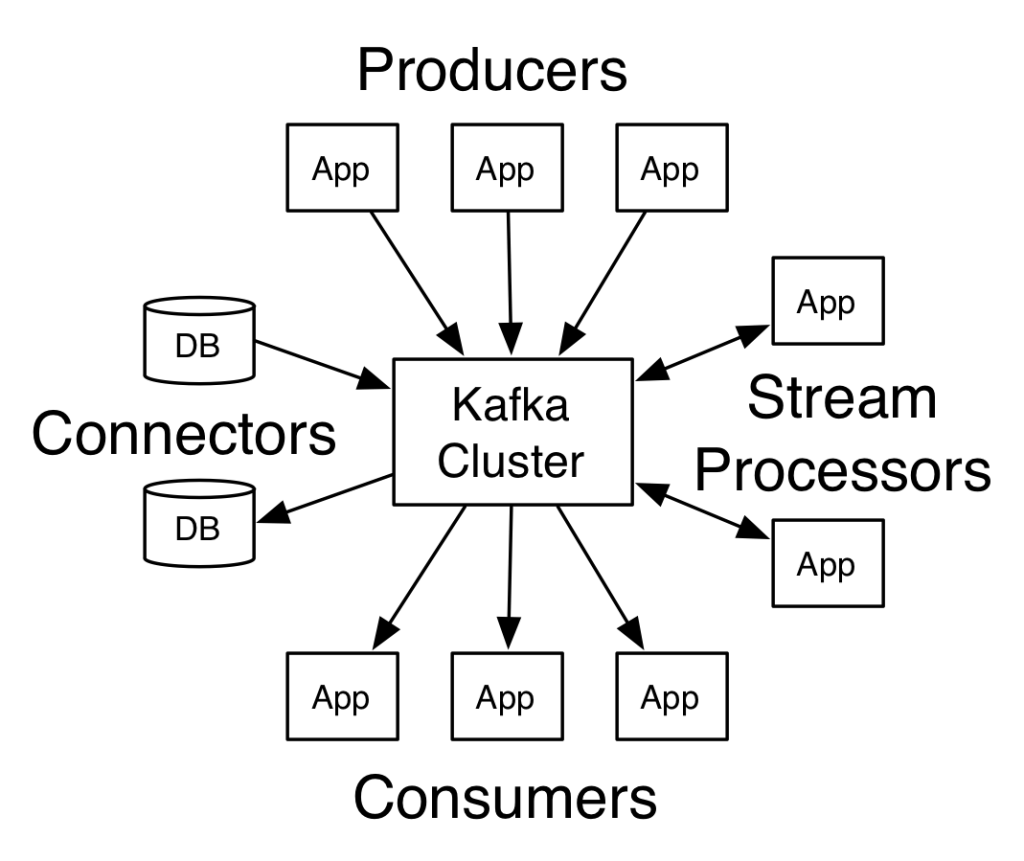

The heart of Kafka is the concept of producers and consumers. One of the beauties of Kafka is its simplicity. With an understanding of just a few Kafka basics, real problems can be solved.

A producer is an application, service, or similar component that posts a request. The request might be to save, update, or enter new data to be stored somewhere, or it might be a request for a task to be accomplished.

Next is the concept of a topic. I think of topics as filters. If an application wants to identify objects in a stream of images, its producer writes to a topic called “identify object”. The producer has no idea where the consumer that will satisfy the request is running; it simply wants a response. This is a very powerful concept.

Somewhere, there is what is called a Kafka cluster. A cluster is a set of servers running Kafka (called brokers) that understands topics. When a producer sends a message to a topic, the cluster accepts the request and stores it.

The counterpart of a producer is a consumer. A consumer knows what it is capable of doing. If a consumer can “identify an object”, it subscribes to the “identify object” topic in the cluster. The consumer then takes each request from the topic, applies a machine learning model to identify objects in the image, and satisfies the request.
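To make the producer, topic, cluster, and consumer flow concrete, here is a toy, in-memory Python model. It is not Kafka's client API and not how OverVotch is built; the names `ToyCluster`, `Producer`, `Consumer`, and `identify_objects` are hypothetical. It does mirror one real Kafka idea: a topic is an append-only log, and each consumer group keeps an offset recording how far it has read.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Hypothetical in-memory stand-in for a Kafka cluster: one append-only log per topic."""
    logs: dict = field(default_factory=lambda: defaultdict(list))
    # Committed read position for each (consumer group, topic) pair.
    offsets: dict = field(default_factory=dict)

    def append(self, topic: str, message: dict) -> int:
        """Store a message at the end of the topic's log and return its offset."""
        log = self.logs[topic]
        log.append(message)
        return len(log) - 1

    def fetch(self, group: str, topic: str, max_messages: int = 10) -> list:
        """Return unread messages for a group and advance that group's offset."""
        start = self.offsets.get((group, topic), 0)
        batch = self.logs[topic][start:start + max_messages]
        self.offsets[(group, topic)] = start + len(batch)
        return batch


class Producer:
    def __init__(self, cluster: ToyCluster):
        self.cluster = cluster

    def send(self, topic: str, message: dict) -> int:
        # The producer only names a topic; it knows nothing about consumers.
        return self.cluster.append(topic, message)


class Consumer:
    def __init__(self, cluster: ToyCluster, group: str, topic: str, handler):
        self.cluster, self.group, self.topic, self.handler = cluster, group, topic, handler

    def poll_once(self) -> list:
        # Pull whatever is new on the subscribed topic and handle each request.
        return [self.handler(msg) for msg in self.cluster.fetch(self.group, self.topic)]


def identify_objects(msg: dict) -> str:
    # Hypothetical placeholder for running a machine learning model on an image.
    return f"objects found in {msg['image']}"


cluster = ToyCluster()
producer = Producer(cluster)
producer.send("identify object", {"image": "frame-001.jpg"})
producer.send("identify object", {"image": "frame-002.jpg"})

worker = Consumer(cluster, group="vision", topic="identify object", handler=identify_objects)
print(worker.poll_once())  # ['objects found in frame-001.jpg', 'objects found in frame-002.jpg']
print(worker.poll_once())  # [] because the group's offset already moved past both messages
```

Real Kafka splits each topic into partitions spread across brokers, and consumers in the same group divide those partitions so each message is processed by one member of the group; the toy keeps a single log per topic to stay short. In the toy, two consumers that share a group name also share one offset, so whichever polls first takes the pending requests. A real deployment would use a Kafka client library such as confluent-kafka or kafka-python instead.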

The first image in the post shows a teacher, students, and a blackboard. Think of the teacher as a producer, the blackboard as a Kafka cluster, and the students as consumers. The teacher writes a French phrase on the blackboard. The teacher has produced a request: translate the French phrase. One of the students understands the phrase written on the blackboard, translates it, and satisfies the task.

Kafka is not unique in solving problems like the ones mentioned above. “Pub/Sub” (publish/subscribe), pretty much a synonym for producer/consumer, is supported in both the cloud and the enterprise. But Kafka, Pub/Sub, and similar services alone are not effective at solving a class of problems that require near-real-time responses. Case in point:

I have seen a number of systems that can monitor a camera's video stream, classify a violent event, and issue an alarm. The biggest issue I see is time. Whether a violent event is detected in under a second or in several seconds can be the difference between surviving the event and, at the very least, being violently beaten. When monitoring is done remotely, in the enterprise or the cloud, classifying the event does not take advantage of local resources.

For decades, information technology was about the enterprise. Data centers could be placed strategically around the globe. Need more resources? Buy and configure more resources. Cloud vendors identified a market: enterprise resources were often idle and took significant time to purchase and configure. The cloud was born. Dynamically expand and contract. Big savings in time and money. But in less than a decade, the cloud was found to be a constrained solution.

There are billions of sensors in the world; pick a number, a very big number. Desktops and laptops now come with teraflop performance. Cell phones have compute power that only a few years ago was thought to exist only in supercomputers. Companies such as Intel and Nvidia provide small, off-the-shelf devices capable of hundreds of teraflops. Some performance numbers for off-the-shelf edge devices can be found here. Having worked with some of these devices, I can attest to what is possible. Using Torch and edge devices, it is realistic to monitor camera streams in 30 to 60 ms, collect a minimum of seven frames, and determine intent.
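As a rough illustration of the seven-frame idea, the sketch below buffers the most recent frames and hands a full window to a classifier. It assumes the 30 to 60 ms figure is per-frame processing time and that frames are handled one after another; under that reading, a full window costs 7 × 30 = 210 ms to 7 × 60 = 420 ms, still well under a second. `IntentWindow`, `window_latency_ms`, and `toy_classifier` are hypothetical names, and the classifier is only a placeholder for where a Torch model would go.

```python
from collections import deque
from typing import Callable, Optional, Sequence

WINDOW = 7  # the post's "minimum of seven frames"


def window_latency_ms(per_frame_ms: float, frames: int = WINDOW) -> float:
    """Time to process a full window, assuming frames are processed sequentially."""
    return per_frame_ms * frames


class IntentWindow:
    """Hypothetical buffer of the most recent frames; classifies once the window is full."""

    def __init__(self, classify: Callable[[Sequence], str], size: int = WINDOW):
        self.frames = deque(maxlen=size)  # the oldest frame drops off automatically
        self.classify = classify

    def push(self, frame) -> Optional[str]:
        self.frames.append(frame)
        if len(self.frames) < self.frames.maxlen:
            return None  # not enough context yet
        return self.classify(list(self.frames))


def toy_classifier(frames: Sequence) -> str:
    # Hypothetical stand-in for a Torch model: flags the window if any frame is marked "violent".
    return "alarm" if any(f == "violent" for f in frames) else "normal"


print(window_latency_ms(30), window_latency_ms(60))  # 210 420

window = IntentWindow(toy_classifier)
results = [window.push(f) for f in ["calm"] * 6 + ["violent"]]
print(results[-1])  # alarm
```

A sliding window keeps memory fixed and lets a new classification run on every incoming frame once the first seven have arrived, rather than waiting for a fresh batch of seven each time.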

Drive down any block in the suburbs and think about the enormous amount of compute resources sitting idle. Drive by a furniture store and think about the compute resources in the accounting department sitting idle. Fill up at a gas station and think about the desktop collecting and storing video streams. Then think about enabling those edge resources to classify, detect and determine intent in the time that it takes most humans to take one or two breaths.

When I was young, I used to read books about how technology was going to make life better. That was a driving force in my decision to pursue engineering: to produce solutions that make life better. OverVotch is about applying technology to make life safer in an increasingly dangerous world.

Next up, I will write about how edge devices leverage the cloud to make a safer world.

Discover more from Threat Detection